Unraveling Motion: A Deep Dive into Optical Flow in Computer Vision

Table Of Content

By CamelEdge

Updated on Mon Dec 02 2024

Introduction

Motion is an integral part of our lives, whether it’s noticing the movement of vehicles on the road or tracking a soccer ball in a game. Our ability to perceive, understand, and predict motion enables us to interact effectively with the dynamic world around us. In the digital world, mimicking our natural ability to perceive motion requires the analysis of movement within video frames, a technique referred to as optical flow.

In this blog, we’ll explore optical flow, its principles, challenges, and methods.

What is Optical Flow?

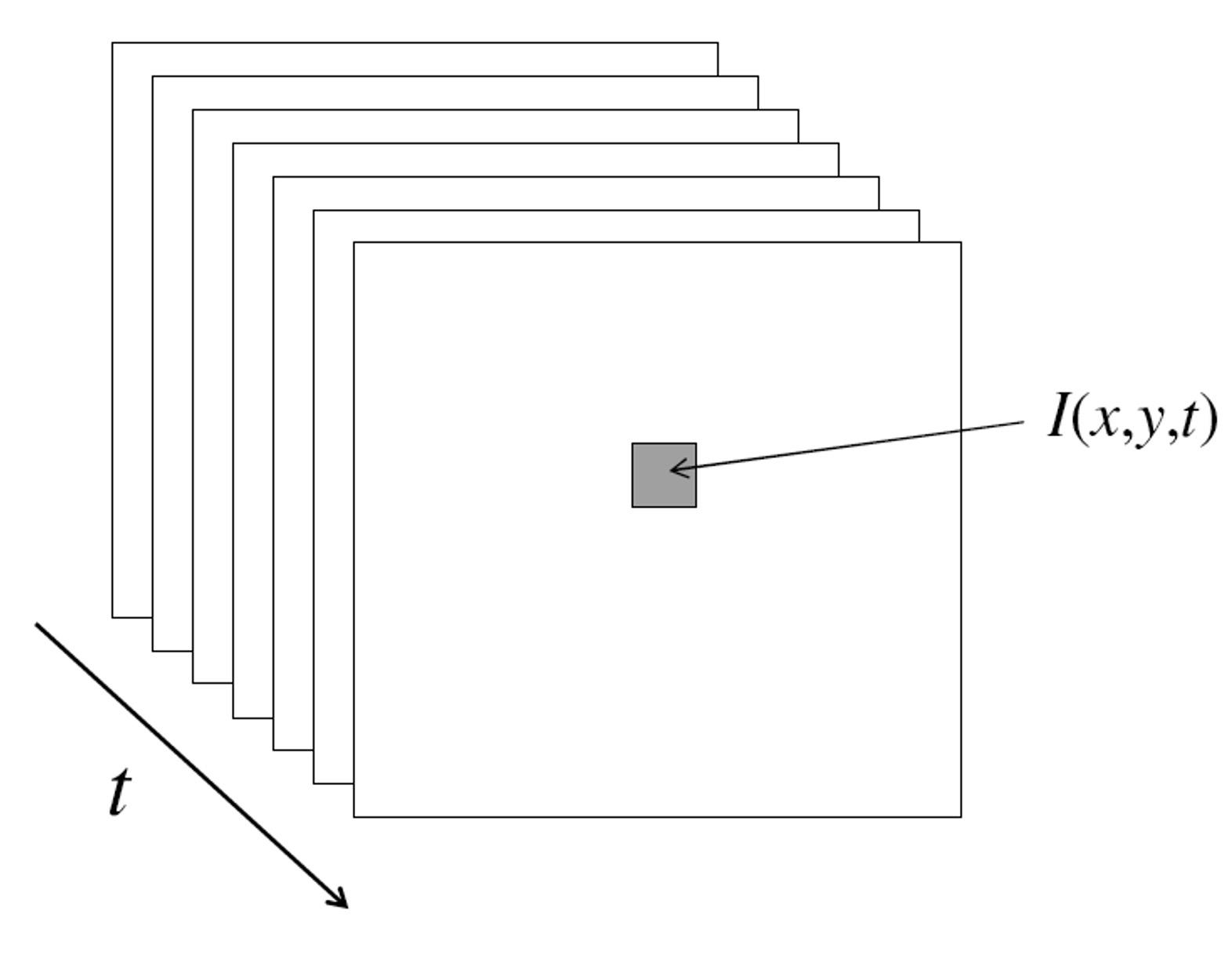

At its core, optical flow refers to the apparent motion of objects, surfaces, or edges in a visual scene, as observed through a sequence of images over time. A video can be thought of as a collection of frames captured at successive moments. In this context, the image data can be represented as a function of space and time :

Optical flow involves estimating how pixel intensities in these images move from one frame to the next. This estimation helps identify the motion patterns in a scene, which is crucial for tasks such as object tracking, scene reconstruction, and robotic navigation.

The Aperture Problem

Before diving into the methods of calculating optical flow, let’s address a fundamental challenge: the aperture problem.

The aperture problem refers to a limitation in accurately estimating the motion of objects in an image when only a small local region of the image is considered

Imagine watching a straight edge move from left to right through a small circular window. You know the edge is moving to the right, but when you look through the window, the movement suddenly seems different. Why does this happen?

Illustration of the aperture problem: A line moves from left to right, but when viewed through a small window, the perceived motion direction differs.

It’s because movement along the edge doesn’t change how bright the pixels appear—it looks the same as if the edge wasn’t moving at all. Only motion perpendicular to the edge creates visible changes in brightness that we can detect.

This limitation, known as the aperture problem, makes estimating motion in optical flow tricky. It’s a key reason why understanding motion isn’t always straightforward in computer vision.

Motion along the edge direction is not detectable

This phenomenon is also observed in the barberpole illusion.

How Do We Estimate Motion?

To compute the motion of pixels from one frame to the next, such as from to , we rely on certain key assumptions:

Brightness Constancy

This principle assumes that the intensity of a point in the image remains the same across frames. For example, a pixel in retains its brightness in .

In grayscale images, this is often referred to as brightness constancy.

where and represent the motion (optical flow) in the and directions.

Small Motion

Motion is assumed to be small, meaning that objects or points don’t move far between consecutive frames. This allows us to use mathematical approximations, such as the Taylor series expansion, to linearize the changes in the image.

Under these two assumptions, we derive the brightness constancy constraint equation as follows:

Brightness Constancy Constraint

Brightness Constancy Constraint:

This is the optical flow equation, but it presents a problem: we have one equation and two unknowns for every pixel. To solve this, additional constraints are needed.

Optical Flow Estimation

Several methods have been proposed to resolve the ambiguity in the optical flow equation. Two prominent approaches are the Horn-Schunck method and the Lucas-Kanade method.

Horn-Schunck Method

The Horn-Schunck method assumes that the optical flow is smooth across the image. This global method computes a dense optical flow field by minimizing an energy function that combines two constraints:

- Brightness Constancy: Ensures that the intensity of points doesn’t change over time.

- Smooth Flow: Ensures that the flow varies smoothly from pixel to pixel.

This method is suitable for estimating dense motion fields but can be computationally expensive, especially for large images or videos.

Lucas-Kanade Method

In contrast, the Lucas-Kanade method assumes that the optical flow is constant within small patches of the image. It uses a local window around each pixel to gather more equations (up to 25 equations for a window) and solve for and .

This method is computationally efficient and works well for tracking sparse features in an image, such as corners or points of interest.

Horn-Schunck vs. Lucas-Kanade

| Feature | Horn-Schunck | Lucas-Kanade |

|---|---|---|

| Flow Type | Dense | Sparse |

| Assumption | Smooth Flow | Constant Flow |

| Scope | Global | Local |

| Computational Cost | High | Moderate |

Horn-Schunck Method

The Horn-Schunck method is based on the principle of minimizing an energy functional that combines the brightness constancy constraint with a smoothness term. The energy functional is defined as:

The solution to this problem involves the use of variational calculus, a mathematical technique that allows us to find functions that minimize or maximize functionals. In the context of the Horn-Schunck method, variational calculus is employed to derive the Euler-Lagrange equations, which provide the necessary conditions for the optical flow fields and to minimize the energy functional. This process involves taking the functional derivative of the energy expression with respect to the flow fields and setting it to zero, leading to a set of partial differential equations that describe the optimal flow.

Lucas-Kanade

The Lucas-Kanade method is particularly effective for tracking small, localized motions in an image sequence. It operates under the assumption that the motion of the pixels within a small neighborhood is approximately constant. This allows the method to solve for the optical flow by considering multiple equations derived from the image gradients within the neighborhood.

The method can be summarized in the following steps:

- Select a Window: Choose a small window around each pixel where the flow is assumed to be constant.

- Compute Image Gradients: Calculate the spatial gradients and and the temporal gradient within the window.

- Formulate the System of Equations: For each pixel in the window, the optical flow equation is formed. If we use a window, that gives us 25 equations per pixel

- Solve for Flow: Use least squares to solve the over-determined system of equations for the flow vector .

Solubility of Lucas-Kanade When is the Lucas-Kanade method solvable? In other words, what are the characteristics of good points to track?

- The matrix should be invertible.

- should not be too small to avoid being affected by noise.

- The eigenvalues and of should not be too small.

- ATA should be well-conditioned.

- The ratio should not be too large (where is the larger eigenvalue).

The condition for the solubility of the Lucas-Kanade method, which involves ensuring that the matrix is invertible and well-conditioned, is akin to the criteria used in the Harris corner detector. In the Harris corner detector, the focus is on identifying keypoints in an image where the intensity changes significantly in all directions, which is determined by analyzing the eigenvalues of a similar matrix. Both methods rely on the properties of these eigenvalues to ensure that the points selected are suitable for tracking or corner detection, as they provide stable and reliable features.

On nice textured regions motion is well estimated because the gradient varies over the window.

Dealing with Large Motions: Coarse-to-Fine Estimation

When there’s significant motion between frames, small motion assumptions may no longer hold. To handle such cases, a coarse-to-fine strategy is often employed. It involves the following steps:

- Downsampling: The image is downsampled to create a low-resolution version, where large motions appear smaller.

- Estimating Motion: Optical flow is computed at the coarse level.

- Refinement: The flow is refined iteratively at finer resolutions, propagating information from the coarse level to the fine level.

This hierarchical approach ensures accurate motion estimation for large displacements while maintaining computational efficiency.

OpenCV (Python) based Implementation

Sparse Optical Flow: This code demonstrates how to implement sparse optical flow tracking using the Lucas-Kanade method in OpenCV. A video file is loaded, and key feature points are identified in the first frame using the cv.goodFeaturesToTrack function, which detects corners. For each subsequent frame, the cv.calcOpticalFlowPyrLK function calculates the motion of these points between the current and previous frames. Only points successfully tracked are retained, and lines are drawn to visualize their movement, along with rectangles to highlight their new positions. Random colors are assigned to points for better visualization. The process repeats until the video ends or the user presses 'q', creating an interactive display of point tracking throughout the video.

import numpy as np

import cv2 as cv

from matplotlib import pyplot as plt

cap = cv.VideoCapture('<video file>')

n = 100 # number of points to track

winSize = (15,15) # size of the window for lucas kanade

ret, frame = cap.read()

gray0 = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)

# Find good features to track

points = cv.goodFeaturesToTrack(gray0, maxCorners=n, qualityLevel=0.1, minDistance=7)

color = np.random.randint(0, 255, (n, 3))

points0 = points

while cap.isOpened():

ret, frame = cap.read()

if not ret:

print("Can't receive frame (stream end?). Exiting ...")

#break

gray1 = cv.cvtColor(frame, cv.COLOR_BGR2GRAY)

points1, status, err = cv.calcOpticalFlowPyrLK(gray0, gray1, points0, None, winSize=winSize, maxLevel=3)

# Select good points

points0 = points0[status == 1]

points1 = points1[status == 1]

points = points[status == 1]

color = color.reshape(-1,1,3)[status==1]

# display a line between old position and new position of the points

for i, (p0, p1) in enumerate(zip(points, points1)):

frame = cv.line(frame, p0.astype(int), p1.astype(int), color[i].tolist(),2)

frame = cv.rectangle(frame, p1.astype(int)-winSize[0]//2,

p1.astype(int)+winSize[0]//2, color[i].tolist(), 2)

cv.imshow('frame', frame)

if cv.waitKey(1) == ord('q'):

break

gray0 = gray1

points0 = points1.reshape(-1,1,2)

points = points.reshape(-1,1,2)

cap.release()

cv.destroyAllWindows()

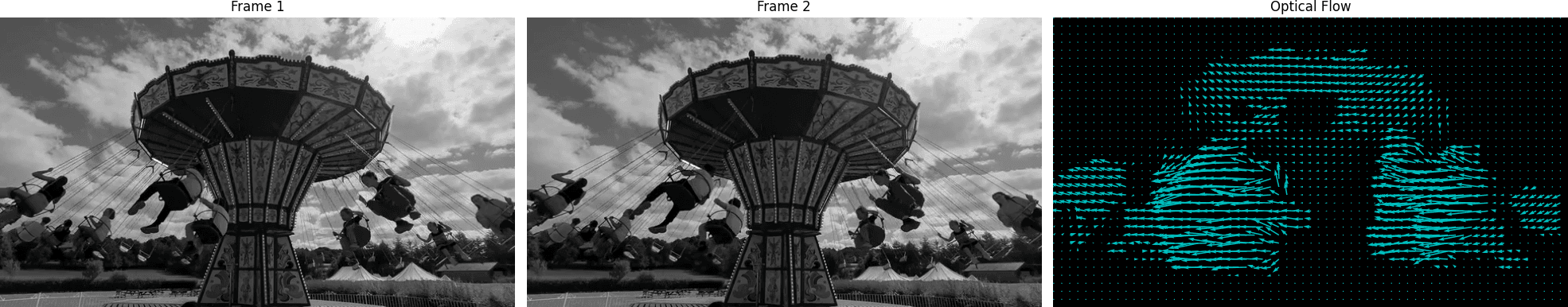

Dense Optical Flow: This code demonstrates dense optical flow estimation between two images using Farneback's method in OpenCV. Two grayscale frames are loaded, and the cv.calcOpticalFlowFarneback function computes the optical flow, representing pixel-wise motion between the two frames. The flow field contains the horizontal and vertical displacement for each pixel.

The results are visualized in three subplots: the first displays the initial frame, the second shows the subsequent frame, and the third uses a quiver plot to overlay flow vectors on a blank background. The flow vectors, sampled at every 10th pixel, highlight the direction and magnitude of motion between the frames, with cyan arrows indicating motion.

frame1 = cv.imread('<First image path>', cv.IMREAD_GRAYSCALE)

frame2 = cv.imread('<Second image path>', cv.IMREAD_GRAYSCALE)

flow = cv.calcOpticalFlowFarneback(frame1, frame2, None, 0.5, 3, 15, 3, 5, 1.2, 0)

# Create the plot

plt.figure(figsize=(20, 10))

# Display the first frame

plt.subplot(1, 3, 1)

plt.imshow(frame1, cmap='gray')

plt.title("Frame 1")

plt.axis('off')

# Display the second frame

plt.subplot(1, 3, 2)

plt.imshow(frame2, cmap='gray')

plt.title("Frame 2")

plt.axis('off')

# Display the optical flow

plt.subplot(1, 3, 3)

plt.imshow(frame1*0, cmap='gray') # Background as the first frame

x, y = np.meshgrid(

np.arange(0, flow.shape[1], 10), # x-coordinates

np.arange(0, flow.shape[0], 10) # y-coordinates

)

plt.quiver(

x, y,

flow[::10, ::10, 0], # Horizontal flow

flow[::10, ::10, 1], # Vertical flow

scale=400, color='c'

)

plt.title("Optical Flow")

plt.axis('off')

# Show the plot

plt.tight_layout()

plt.show()

Conclusion

Optical flow is a powerful tool for understanding motion in visual scenes. By leveraging techniques like the Horn-Schunck and Lucas-Kanade methods, we can estimate pixel motion and unlock a variety of applications in computer vision.

While challenges like the aperture problem and large motion estimation exist, advancements in coarse-to-fine strategies and feature detection continue to improve the robustness of optical flow methods.

FAQs

What is optical flow?

Optical flow refers to the apparent motion of objects, surfaces, or edges in a visual scene between two consecutive video frames. It is estimated by analyzing pixel intensity changes over time and space.

Why is optical flow important?

Optical flow is crucial for applications such as:

- Object tracking

- Motion detection

- Scene reconstruction

- Robotic navigation

What are the key assumptions in optical flow estimation?

Two primary assumptions are:

- Brightness constancy: The intensity of a pixel remains constant across frames.

- Small motion: Motion between consecutive frames is minimal, enabling mathematical linearization.

What is the aperture problem in optical flow?

The aperture problem arises when motion is observed through a small local region of an image. It leads to ambiguities in detecting motion direction, as only movement perpendicular to an edge is apparent.

What are the common methods for computing optical flow?

The two most widely used methods are:

- Horn-Schunck Method: Assumes smooth flow across the image and computes dense optical flow using global optimization.

- Lucas-Kanade Method: Assumes constant flow within small patches and computes sparse optical flow efficiently.

What is the brightness constancy constraint equation?

The equation is:

Here, , , and are the spatial and temporal image gradients, while and are the optical flow components.

What is the Horn-Schunck method?

The Horn-Schunck method calculates dense optical flow by minimizing an energy function that combines brightness constancy and smoothness constraints. This global approach ensures smooth motion estimation but is computationally intensive.

What is the Lucas-Kanade method?

The Lucas-Kanade method computes sparse optical flow by solving the optical flow equation within a local window. It is computationally efficient and suitable for tracking keypoints or features.

What are the conditions for the Lucas-Kanade method to work?

- The matrix (from the system of equations) should be invertible.

- Eigenvalues of should be large enough to avoid noise sensitivity.

- The matrix should be well-conditioned to ensure stability.

What is coarse-to-fine estimation in optical flow?

Coarse-to-fine estimation handles large motions by:

- Downsampling the image to reduce motion magnitude.

- Estimating optical flow at the coarse level.

- Refining the flow iteratively at finer resolutions.

How does optical flow relate to the Harris corner detector?

Both methods use eigenvalues of a gradient-based matrix to identify stable features in an image. The Lucas-Kanade method relies on well-conditioned eigenvalues for reliable flow computation, similar to corner detection criteria in the Harris detector.

What are the challenges in estimating optical flow?

- The aperture problem.

- Ambiguities in regions with uniform intensity.

- High computational costs for dense motion estimation.

- Handling large displacements or rapid motion between frames.

What is the barberpole illusion?

The barberpole illusion demonstrates the aperture problem by showing how motion along an edge is perceived differently when viewed through a restricted window, making motion direction ambiguous.

Test Your Knowledge

1/9

What is the primary goal of optical flow in computer vision?