Feature Detectors and Descriptors: The Backbone of Computer Vision

Table Of Content

By CamelEdge

Updated on Sat Aug 10 2024

Introduction

In computer vision, feature detection and matching is essential tools for analyzing images. They allow computers to identify and understand the key elements within an image, which is crucial for tasks like object recognition, image matching, and image stitching.

Local Feature Extraction

There are three steps invovled.

- Detection

Feature detection is the first step in the process, where we identify key points or regions in an image that are distinctive and useful for further analysis. Some common feature detectors include:

-

Harris Corner Detector: This detector identifies points where the image intensity changes significantly in different directions, often corresponding to corners in the image. Corners are particularly useful because they provide a strong and consistent feature that is less sensitive to minor changes in the image.

-

Blob Detection: The Laplacian of Gaussian (LoG) filter detects regions of rapid intensity change by first smoothing the image with a Gaussian filter and then applying the Laplacian operator. This approach is effective for finding blobs and other areas where the intensity changes significantly.

- Description

Once distinctive points are identified, the next step is to compute a descriptor for each detected interest point. A descriptor captures the local appearance of the point, allowing for robust comparison across different images. A popular descriptor is:

- SIFT (Scale-Invariant Feature Transform): SIFT is a powerful algorithm that describes each key point by computing the gradient orientations and magnitudes in the local neighborhood. This description is invariant to scale and rotation, making SIFT robust to changes in image scale and orientation.

- Matching

The final step involves matching features between images by comparing their descriptors. This process typically includes Distance Calculation. To find correspondences, we compute the distance between feature vectors (descriptors). Common distance metrics include Euclidean distance or more advanced techniques like the Hamming distance for binary descriptors. The goal is to find pairs of points with similar descriptors in different images.

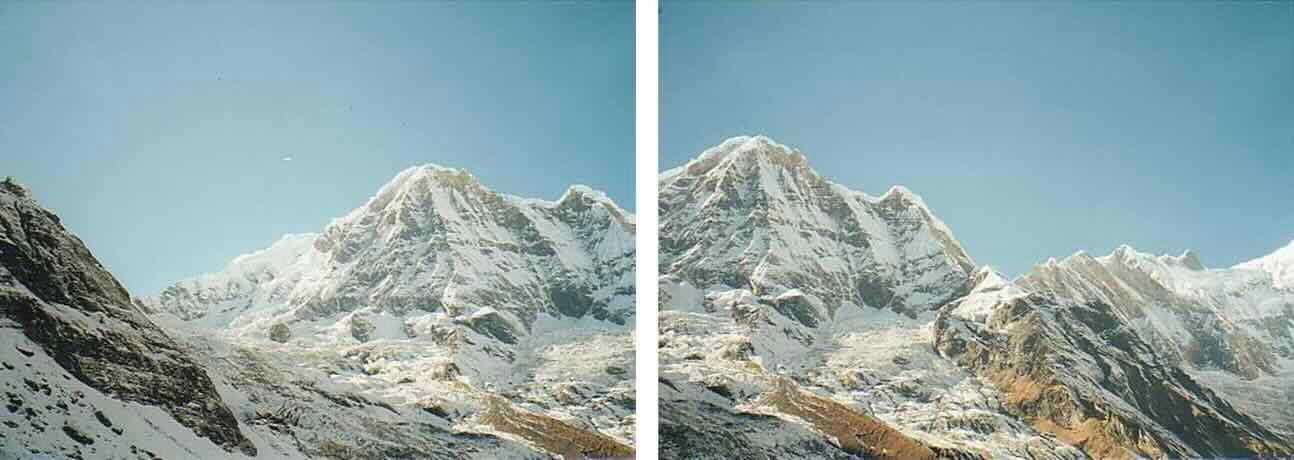

Image Source: Darya Frolova, Denis Simakov

SIFT (Scale-Invariant Feature Transform)

The Scale-Invariant Feature Transform (SIFT) is one of the most well-known and widely used algorithms in computer vision for detecting and describing local features in images. Developed by David Lowe in 1999, SIFT has been instrumental in enabling robust and reliable matching of images under various transformations and distortions.

SIFT works by identifying and describing key points in an image that are invariant to scale, rotation, and partial illumination changes. Here is how it works:

SIFT Detector:

This process is similar to applying the Laplacian of Gaussian (LoG), a filter used to detect blobs in an image by finding regions of rapid intensity change. However, instead of directly using LoG, SIFT approximates it using the Difference of Gaussian DoG. The DoG is created by applying Gaussian blurring to the image at multiple scales and then subtracting adjacent blurred images. The approximation with DoG is computationally more efficient and effectively captures the essence of LoG, making it suitable for detecting stable key points that are invariant to image scaling.

Key points are identified by detecting maxima and minima in the Difference of Gaussian (DoG) scale space. This involves comparing each point in the image with its eight neighboring pixels at the same scale and with nine neighboring pixels at the scales directly above and below.

SIFT Descriptor: The primary goal of SIFT is to facilitate image matching despite significant transformations. To recognize the same keypoint across different images, we match appearance descriptors, or 'signatures,' within their local neighborhoods. By using descriptors that are invariant to scale and rotation, SIFT effectively manages a wide range of global transformations. This process involves calculating the relative orientation and magnitude within a 16x16 neighborhood around each keypoint, then forming weighted histograms with 8 bins for each 4x4 region. The weights are determined by gradient magnitude and a spatial Gaussian function. Finally, these 16 histograms are concatenated into a single 128-dimensional vector, forming a robust descriptor for each keypoint.

Implementation

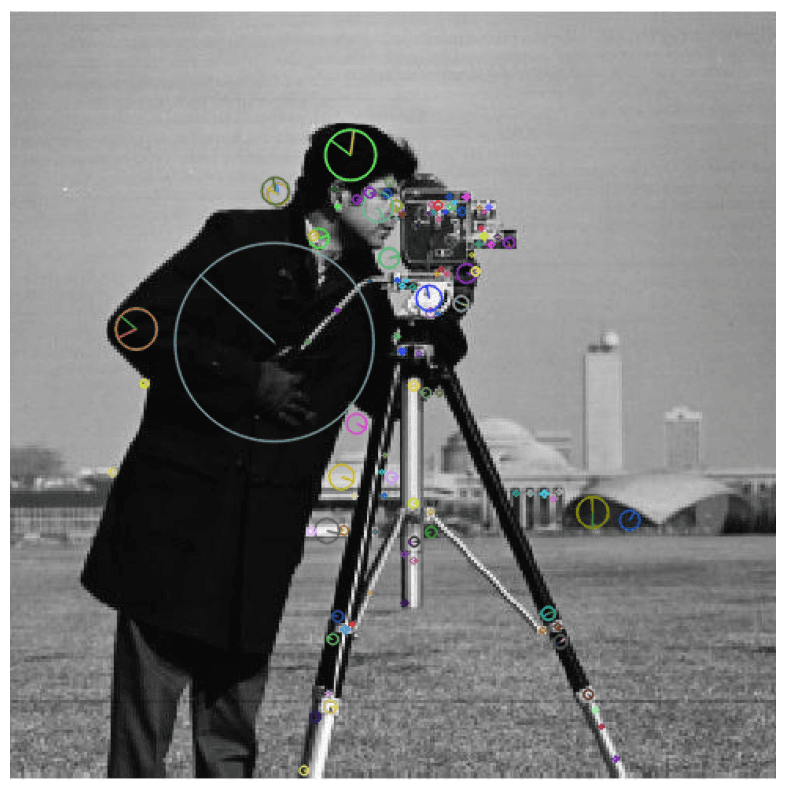

We can use OpenCV to detect SIFT features. The following code demonstrates how to do this.

import cv2

from matplotlib import pyplot as plt

image = cv2.imread('<Image path>', cv2.IMREAD_GRAYSCALE)

sift = cv2.SIFT_create(nfeatures = 150)

kp = sift.detect(image)

des = sift.compute(image, kp)

plt.figure(figsize = (10,10))

img = cv2.drawKeypoints(image, kp, -1, flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

plt.imshow(img), plt.axis('off')

Characteristics of Good Features

SIFT (Scale-Invariant Feature Transform) features are considered effective due to their following characteristics:

Repeatability: SIFT features can be consistently identified across different images, even when the images undergo various geometric transformations (such as scaling and rotation) or photometric changes (such as changes in lighting).

Saliency: SIFT features are distinctive and located in regions of the image that are considered "interesting" or important, making them stand out from the surrounding areas.

Compactness and Efficiency: SIFT features are fewer in number compared to the total number of image pixels, which makes them computationally efficient and practical for matching and recognition tasks.

Locality: Each SIFT feature is associated with a small local region of the image, which enhances their robustness against clutter and occlusion, as the features are not significantly affected by changes in the surrounding areas.

Applications of SIFT

SIFT's robustness and reliability make it ideal for a variety of computer vision tasks, including:

Image Matching: SIFT can be used to match features between different images, even if they are taken from different angles, scales, or lighting conditions. This is useful in object recognition, panorama stitching, and 3D reconstruction.

Object Recognition: By matching key points between an image and a database of known objects, SIFT can identify and locate objects within an image.

Robust Tracking: SIFT descriptors can track features across video frames, enabling applications in motion analysis and augmented reality.

Other Feature Detectors and Descriptors

Here’s a polished version:

SURF (Speeded-Up Robust Features): An optimized, faster version of SIFT, designed to improve the speed of feature detection and description while maintaining robustness.

FAST (Features from Accelerated Segment Test): A high-speed corner detection method suitable for real-time applications, offering rapid feature detection.

BRIEF (Binary Robust Independent Elementary Features): Provides efficient binary descriptors for feature matching, reducing memory usage and computational time while still achieving high recognition accuracy.

ORB (Oriented FAST and Rotated BRIEF): A cost-free alternative to SIFT and SURF, combining the FAST feature detector with the BRIEF descriptor to offer a fast, robust solution for feature detection and description.

FAQs

Q: What are the key steps involved in local feature extraction?

A: Local feature extraction involves three key steps:

- Detection: Identifying distinctive key points or regions in an image.

- Description: Computing a descriptor that captures the local appearance of each key point.

- Matching: Comparing feature descriptors between images to find correspondences.

Q: Can you explain the concept of SIFT (Scale-Invariant Feature Transform)?

A: SIFT is a widely used algorithm for detecting and describing local features in images. It identifies key points that are invariant to scale, rotation, and partial illumination changes. SIFT uses the Difference of Gaussian (DoG) to approximate the Laplacian of Gaussian (LoG) filter, making it computationally efficient for detecting stable key points. The descriptors created are robust to various transformations, allowing for effective image matching.

Q: What are the characteristics of good SIFT features?

A: Good SIFT features have the following characteristics:

- Repeatability: Consistent identification across different images, even with transformations.

- Saliency: Distinctive features located in significant areas of the image.

- Compactness and Efficiency: Fewer features than image pixels, making them computationally efficient.

- Locality: Features occupy small areas, making them robust to clutter and occlusion.

Q: What are some applications of SIFT?

A: SIFT is used in various computer vision tasks, including:

- Image Matching: Matching features across different images.

- Object Recognition: Identifying and locating objects within images.

- Robust Tracking: Tracking features across video frames for applications like motion analysis and augmented reality.

Related Posts

- Edge Detection

- Corner Detection

- Blob Detection

- Image Filtering

- Image Formation

- What is Computer Vision

Test Your Knowledge

1/10

What is the primary purpose of feature detection in computer vision?